Talk:Subpixel rendering

This article is rated C-class on Wikipedia's content assessment scale.

Subpixel rendering on the Apple II

The article states "Whereas subpixel rendering sacrifices color to gain resolution, the Apple II sacrificed resolution to gain color." Steve Wozniak, the designer of the Apple II, would disagree with this claim. See [1] where he is quoted as saying: "more than twenty years ago, Apple II graphics programmers were using this 'sub-pixel' technology to effectively increase the horizontal resolution of their Apple II displays." (emphasis added). Drew3D 18:14, 1 March 2006 (UTC)

I've rewritten the section to explain the Apple II graphics mode in hopefully enough detail (perhaps too much!) to explain how it both is and is not subpixel rendering depending on how you look at it. Gibson is right if you think of the screen as having 140 color pixels horizontally, which is not unreasonable if you're doing color software, but when programming it you wouldn't plot purple and green pixels, you'd just plot white pixels (which would show up purple or green or white, depending on what was next to them and whether they were at even or odd coordinates). The Apple II did have a true subpixel rendering feature (a half-pixel shift when painting with certain colors) that was exploited by some software, but it bears no relation to LCD subpixel rendering as described in this article. Jerry Kindall 16:23, 2 March 2006 (UTC)

- Apple II graphics programmers used sub-pixel technology to increase the horizontal resolution of their Apple II displays. That is an absolutely unambiguous statement by Steve Wozniak, the designer of the Apple II. The paragraphs you added do nothing to disprove his statement, or even argue against it. Your claim that this "bears no relation to LCD subpixel rendering as described in this article" is false. There are differences in how exactly a pixel is addressed programmatically because one is an LCD display and one is an NTSC display, but the basic concept is exactly the same: instead of addressing whole pixels, you address sub-pixels, in order to achieve a higher apparent resolution. That is hardly "bearing no relation". That is the exact same concept. Drew3D 22:30, 3 March 2006 (UTC)

- There are no sub-pixels on an Apple II hi-res display because there are not actually any colors. The colors are completely artifacts. As Gibson describes it, programmers would alternate purple pixels and green pixels to smooth out diagonals, when in fact if you're drawing in white you can't help doing that because the even pixels are always green and the odd pixels are always purple! (Or vice versa, I can't remember right now.) Whether it's subpixel rendering or not depends entirely on whether you think of the graphics screen as being 280x192 monochrome or 140x192 color, but these are just ways of thinking about the screen and not actually separate modes! At the lowest level you can only turn individual monochrome pixels on and off, and if you wanted to be sure you got white you had to draw two pixels wide. The half-pixel shift with colors 4-7 is sort of like subpixel rendering, but it doesn't actually plot a fractional pixel but a full pixel shifted half a pixel's width to the right. I won't go so far as to claim Wozniak doesn't know how his own computer works, which would be stupid, but I wouldn't say he is necessarily above claiming credit for Apple II "innovations" that only become apparent in hindsight if you tilt your head a certain way. If you read Wozniak's original literature on the Apple II, he is clearly intending to trade off resolution for color, not the other way around. No Apple II graphics programmer (and I was one, co-authoring a CAD package called AccuDraw) thought they were plotting "subpixels" when plotting lines as Gibson describes, they were drawing white lines with a width of two pixels. The way the Apple II did color, while a clever hardware hack, was a complete pain for programmers and had to be constantly worked around in various ways. The typical question was not "how do I get more resolution from this color screen?" but "how do I get rid of these #$#! green and purple fringes?" Jerry Kindall 17:15, 4 March 2006 (UTC)

The Apple II section is incorrect, no matter what Woz said. The Apple II is not capable of subpixel rendering because every pixel is the same size - no matter what color. If purple or green pixels on the screen were half the size of white pixels, then you'd have subpixel rendering. If you disagree, if you believe that the Apple II is capable of subpixel rendering, then please show me a screenshot of a color Apple II display on which a colored pixel is physically smaller than a white pixel. Otherwise, the "Subpixel Rendering and the Apple II" section needs to be edited down to remove the irrelevant discussion of why 1-bit hi-res bitmaps suddenly take on fringes of color when they're interpreted as color bitmaps. - Brian Kendig 18:10, 19 March 2006 (UTC)

- Well, my entire point was that because of the position-based trick for generating color, you can't have a single, horizontally-isolated white pixel. All solitary pixels on a scanline appear to be color, and dots that appear white must be at least two pixels wide. This can be very easily seen on any emulator if you don't have an actual Apple II handy. The discussion of Apple II hi-res color is admittedly too long and contains too much detail for casual readers interested only in subpixel rendering, but some of that background is necessary to understand why Gibson is sort of right and sort of wrong, depending on how you think about color on the Apple II. I just wasn't sure what to cut, maybe I'll take another whack at it after thinking about it for a bit. Jerry Kindall 01:20, 25 March 2006 (UTC)

- I've been trying to understand this, but I'm still unclear on the concept! Are you saying that, for example, on a color Apple II display: a bit pattern of '11' represents a white pixel, but a bit pattern of '01' represents a pixel of which half is black and half is a color? So, in other words, you can't specify the color of an individual pixel; setting a color of '01' or '10' will make one pixel black and the pixel beside it a color, but setting a color of '11' will make two adjacent pixels white? - Brian Kendig 21:52, 25 March 2006 (UTC)

- You've nearly got it. A single pixel (i.e. the surrounding pixels on either side are black) is ALWAYS a color. Even pixels are purple (or blue) and odd pixels are green (or orange). Two pixels next to each other are always white. (Blue and orange are complications which can be ignored for now because they are analogous to purple and green. Each byte in screen memory holds 7 pixels, plus a flag bit that chooses a color set. Each horizontal group of 7 pixels can thus contain either purple/green/white pixels OR blue/orange/white pixels, but never both.)

- If you have an Apple II emulator, try this: HGR:HCOLOR=3:HPLOT 0,0. Note that HCOLOR=3 is described as "white" in Apple II programming manuals, but you get a purple pixel. Then try: HGR:HCOLOR=3:HPLOT 1,0. Again, you're still plotting in "white," but you get green! Finally, try HGR:HCOLOR=3:HPLOT 0,0:HPLOT 1,0. Now you have two pixels and both are white. (If you want to see blue and orange instead of purple and green, try HCOLOR=7 instead of HCOLOR=3. HCOLOR=7 is also documented as being white, but it sets the high-bit of the bytes in the graphics memory, inducing a half-pixel shift that results in the alternate colors.) Also note that if you do something like this: HGR:HCOLOR=2:HPLOT 0,0 TO 279,0 you get what looks like a solid purple line in color mode, but if you switch the emulator to monochrome mode (most allow this) you'll see that the computer has simply left every other dot black!

- Now try this: HGR:HCOLOR=1:HPLOT 0,0. The screen's still blank even though you've told the computer to draw a green pixel. Why? You have told the computer to draw in green (HCOLOR=1) but have told it to plot 0,0, a pixel which cannot be green because it has an even horizontal coordinate. So the computer makes that pixel black! In short, when you tell the computer to plot in a color besides black or white, it actually draws "through" a mask in which every other horizontal pixel is forced off.

- One last illustration: HGR:HCOLOR=3:HPLOT 1,0:HPLOT 2,0. You get two pixels that both look white. So we see that any pair of adjacent pixels comes out white. You don't need an even pixel and then an odd one; an odd one followed by an even one works just as well.

- Now, if you think of the screen in terms of pixel pairs, which does simplify things from a perspective of programming color graphics (you can say 00 means black, 01 means green, 10 means purple, 11 means white, as long as you don't mind halving the horizontal resolution), then you have two "subpixels" and these can indeed be used to smooth diagonal lines as Gibson explains. However, most Apple II programmers wouldn't think of it that way. Since the colors are artifacts of positioning to begin with, what Gibson's talking about doing is exactly, bit-for-bit identical to plotting a white diagonal line two pixels wide using the full horizontal resolution. You're not really turning on a green pixel next to a purple pixel. You can't make a pixel green or purple; pixels are colored because of their positioning. You can only turn pixels on and off, and you get purple or green depending on whether they are at even or odd horizontal coordinates, and two next to each other are always white. (This all has to do with the phase of the NTSC color subcarrier at particular positions on a scanline.)

- This is way harder to explain than it should be. But then, it was way harder to understand back in 1982 (when I learned it) than it should have been, too. Apple II color is a total hack designed to get color into a machine at a price point that wouldn't allow Woz to include real color generation circuitry. Anyhow, perhaps it's time for a separate article explaining Apple II graphics modes? That could get linked from here and from Apple II articles and that way we could shorten this one up. Jerry Kindall 23:13, 27 March 2006 (UTC)
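A minimal sketch of the rules described above, written in Python rather than Applesoft BASIC so it can be run anywhere. It assumes the simplified model from this thread: a lit dot with black neighbours is purple at even columns and green at odd columns (blue and orange with the flag bit set), and any two adjacent lit dots look white. The names `artifact_colors` and `plot_with_hcolor` are made up for illustration, and the model ignores how a real display blends alternating dots into a solid color run.

```python
def artifact_colors(bits, high_bit=False):
    """Return the apparent color of each dot on one scanline.

    bits: sequence of 0/1 values, one per monochrome dot (x = index).
    high_bit: True selects the blue/orange set instead of purple/green.
    Simplified model only; not a faithful NTSC simulation.
    """
    even, odd = ("blue", "orange") if high_bit else ("purple", "green")
    colors = []
    for x, on in enumerate(bits):
        if not on:
            colors.append("black")
            continue
        left = x > 0 and bits[x - 1]
        right = x + 1 < len(bits) and bits[x + 1]
        if left or right:
            # Two lit dots side by side always read as white.
            colors.append("white")
        else:
            # An isolated dot takes its color from its column parity.
            colors.append(even if x % 2 == 0 else odd)
    return colors


def plot_with_hcolor(bits, x, hcolor):
    """Mimic HPLOT x,0 under the mask rule above (HCOLOR 1, 2 and 3 only)."""
    if hcolor == 3:        # "white": any dot may be lit
        bits[x] = 1
    elif hcolor == 2:      # purple: only even columns may be lit
        bits[x] = 1 if x % 2 == 0 else 0
    elif hcolor == 1:      # green: only odd columns may be lit
        bits[x] = 1 if x % 2 == 1 else 0
    else:
        raise ValueError("only HCOLOR 1, 2 and 3 are modelled")
    return bits


if __name__ == "__main__":
    print(artifact_colors([1, 0, 0, 0]))  # isolated dot at an even column
    print(artifact_colors([0, 1, 1, 0]))  # odd-then-even pair
```

Under this model, plotting "green" at column 0 leaves the scanline blank, which matches the HCOLOR=1 experiment above.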

- With a little distance I was able to rewrite this section to be a bit shorter. Jerry Kindall 16:11, 6 April 2006 (UTC)

- I'm not sure how the Apple II pixels relate to NTSC TV picture elements, but the description still sounds like the programmer is controlling individual display elements (purple/green in Apple II; red/green/blue in most modern systems), which come together to form a single pixel. The elements have 256 levels of colour in an RGB display, so you can create 16 million+ colours; they only have 2 levels of colour in the Apple II, so you can only create 4 colours.

...it doesn't actually plot a fractional pixel but a full pixel shifted half a pixel's width to the right.

- Hm. Isn't that a fractionally-positioned pixel? Isn't that the same thing you are doing in RGB subpixel rendering? I don't think you can make a 1/3-pixel vertical white line in RGB systems any more than you can make a 1/2-pixel vertical line in the Apple II. In RGB there are three colour elements, so you can effectively shift pixels right or left by 1/3, but that's not exactly the same as a full 3× horizontal resolution, is it?

- Pardon me if I'm misunderstanding. It sounds like the difference is that in RGB sub-pixel rendering we are controlling sub-pixels corresponding directly to elements in the hardware, while on the Apple II we are controlling pixels on a virtual purple-green matrix, which is further abstracted to the NTSC video monitor. Does that make sense? If so, then I don't think it's quite accurate to say that "it bears no relation to LCD subpixel rendering as described in this article," although there are differences to be noted. —Michael Z. 2007-06-13 23:11 Z

A different claim

The Apple II really does have subpixel rendering. We agree that a color pixel effectively consumes 2 horizontal pixels in monochrome units, and that in monochrome units we have 280 horizontal points. The point is that we can emulate 280 * 2 horizontal pixels. In this sense, the statement above by Woz and the claim by Gibson are totally irrelevant. (They mean that the 280 monochrome pixels are a subpixel rendering of 140 color pixels.)

To see this, let us concentrate on the monochrome mode, i.e. using a monochrome CRT monitor. The Apple II's normal HGR (high-resolution graphics) mode has 280 horizontal points. Let us compare two commands.

- HGR: HCOLOR=3: HPLOT 0,0: HPLOT 0,1

versus

- HGR: HCOLOR=3: HPLOT 0,0: HCOLOR=7: HPLOT 0,1

The first one looks like a vertical line, but the second looks like a tilted line. In effect, we have a shift of only 1/2 unit, even in monochrome units! If we draw lines in this way, we can make use of 280*2 = 560 horizontal points.

This technique is described in, for example, Beagle Bros' tip #5. See [2], page 19. This subpixel method is used to round some fonts (meaningful, of course, on monochrome monitors) in various games. Ugha (talk) 02:21, 12 February 2008 (UTC)

Actually, I found in the main text that the following was already contained:

- The flag bit in each byte affects color by shifting pixels half a pixel-width to the right. This half-pixel shift was exploited by some graphics software, such as HRCG (High-Resolution Character Generator), an Apple utility that displayed text using the high-resolution graphics mode, to smooth diagonals. (Many Apple II users had monochrome displays, or turned down the saturation on their color displays when running software that expected a monochrome display, so this technique was useful.) Although it did not provide a way to address subpixels individually, it did allow positioning of pixels at fractional pixel locations and can thus be considered a form of subpixel rendering. However, this technique is not related to LCD subpixel rendering as described in this article.

This is what I mean: it really does realize fractional pixel positions. Anyway, the last statement is debatable. In the sense that we cannot completely control all the subpixels, this was not as successful as LCD subpixel rendering, but at least it was a primitive form of subpixel rendering. Ugha (talk) 15:08, 13 February 2008 (UTC)
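The 560-position idea can be sketched as below. This is only an illustration under the assumptions stated in this thread (280 dots per line, 7 dots per byte, one flag bit per byte that shifts that byte's dots by half a dot); `half_dot_position` and `shifted_positions` are hypothetical names, and dots are listed left to right without any claim about the hardware bit order.

```python
def half_dot_position(x, high_bit):
    """Horizontal position in half-dot units (0..559) for column x (0..279)."""
    if not 0 <= x < 280:
        raise ValueError("x must be in 0..279")
    return 2 * x + (1 if high_bit else 0)


def shifted_positions(byte_dots, byte_index, high_bit):
    """Half-dot positions of the lit dots in one 7-dot byte.

    byte_dots: seven 0/1 values, leftmost dot first.
    The flag applies to the whole byte, so all seven dots shift together;
    individual half-dots cannot be addressed on their own.
    """
    if len(byte_dots) != 7:
        raise ValueError("a byte holds exactly 7 dots")
    base = 7 * byte_index
    shift = 1 if high_bit else 0
    return [2 * (base + i) + shift for i, on in enumerate(byte_dots) if on]


if __name__ == "__main__":
    # HCOLOR=3 at column 0 versus HCOLOR=7 at column 0: half a dot apart.
    print(half_dot_position(0, False), half_dot_position(0, True))
```

Because the shift is per byte, this gives fractional positioning rather than 560 independently addressable points, which is the distinction drawn in the quoted article text.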

Some samples would be nice

Could someone add some samples at 1:1 resolution for common LCD colour orders? It'd be nice to be able to compare different techniques' output. --njh 08:47, 31 May 2006 (UTC)

- Agreed --Crashie 15:18, 22 March 2007 (UTC)

only lcd?

so crt's don't benefit from sub-pixel rendering? What is the term then for rendering sub-pixels to more accurately determine a pixel's color?

- The sub-pixels (red, green, and blue) inherently determine the pixel's color. It's already being done as accurately as it can be done given the hardware. I'm not sure what you're asking. Jerry Kindall 22:12, 6 June 2006 (UTC)

- I figured out the term I was thinking of, which is "oversampling" (I think). And that shows benefits on a CRT screen too. I guess you could think of oversampling as like the internal resolution you're using to figure out the final shade : on a fractal, for example, located entirely within a pixel, if the lines are black and the background is light, then one pixel's worth of resolution would imply that the pixel should be very very very light grey - but if you quad oversample, and calculate the fractal on a 16x16 grid, and then average all of the greyscale values into the single pixel, it will be a slightly darker shade of grey, and therefore more accurate. As you oversample greater and greater, you approach closer and closer to the color of the pixel if you actually zoomed in to that pixel at 1600x1200 resolution, actually rendered all that, and then took the average value of all 1,920,000 pixels. Of course, this isn't substantially better than just taking the time to render 16 pixels (4x4) in the place of that single value. However, I believe it's better to do this (render 16 sub-pixels to more accurately determine the color of a pixel) than to just render the single pixel without figuring out exact sub-values. I think the article should mention that "sub-pixel rendering" is about rendering sub-pixels specifically to actually use the three separate pixels each LCD (and only LCD) monitor is comprised of for each pixel, rather than "rendering" sub-pixels in order to more accurately determine a pixel's color. The article isn't clear enough that sub-pixel rendering is a "hack" based solely on the fact that LCD monitors don't have solid pixels.

- What you refer to is called antialiasing, and is somewhat orthogonal to that covered in this article. Freetype (and derived renderers such as libart, inkscape(livarot) and AGG) already computes exact coverage for each pixel (and over-sampling any more than 16x16 is pointless with only 256 levels). Over-sampling is but one method for achieving antialiased output. How would you improve the article to prevent confusion in the future? Please sign your posts. --njh 13:02, 7 June 2006 (UTC)

- Subpixel rendering != antialiasing. How is subpixel rendering a "hack"? —Keenan Pepper 20:49, 7 June 2006 (UTC)

- I think the misunderstanding was not realizing that subpixel rendering generally throws away the need to portray colours. It is usually applied as a form of anti-aliasing (though yeah, it does have some other uses), and it's a "hack" because the hardware wasn't really originally intended to be used this way. I don't think we should use the word "hack" though, given its possible derogatory connotation. I've added "black-and-white" to the lead description, which might help this problem. (And in answer to the question about CRT versus LCD, it's that CRTs don't have as clear separation of the subpixels. CRTs are hitting glowing phosphorus (which "bleeds" a bit) with an electron gun from a distance, whereas LCDs have a direct electrical connection with the subpixel, which is much more accurate.) - Rainwarrior 00:18, 8 June 2006 (UTC)

- Phosphor != phosphorus. And colored text looks fine with subpixel rendering on my laptop's LCD. —Keenan Pepper 01:15, 8 June 2006 (UTC)

- Thanks for straightening me out on Phosphor. I'm not much of a chemist, as I guess is evident. As for colored text looking fine, it may, but the ability to anti-alias with this method given a colour context diminishes. You can still do a bit, but there's less material to work with; it really is a black-and-white technique. (If the text is coloured, a few missing sub-pixels isn't really going to make it look bad, I suppose, but this is a subtle effect to begin with.) - Rainwarrior 06:18, 8 June 2006 (UTC)

- Though I might add to my above description: CRTs, unlike LCDs, don't have a 1 to 1 pixel to RGB location on their screens (which is part of the design which compensates for the bleeding effect); any given pixel might be covering, say, five different RGB segments on the actual screen. (Furthermore, even if they were lined up this way, not all CRTs arrange them in vertical strips.) - Rainwarrior 06:56, 8 June 2006 (UTC)

Perhaps not the best place to ask, but does anyone know why subpixel rendering is not used for CRTs? The geometry of the screen is known perfectly well, and pixels are relatively distinct and keep their positions relative to each other. So it should be possible to do the same as ClearType does, but apparently this isn't done. Why?

- Probably the best man to ask would be [3]. He explains it nicely in the FAQ section. --Josh Lee 01:42, Jan 28, 2005 (UTC)

- The software does not have enough info about the electron beam convergence error and the alignment of pixels to the aperture grille. Worse yet, this varies across the screen and even in response to magnetic fields. AlbertCahalan 18:08, 25 May 2005 (UTC)

Further clarification

I just realized that you misinterpreted me with:

- so crt's don't benefit from sub-pixel rendering? What is the term then for rendering sub-pixels to more accurately determine a pixel's color?

- The sub-pixels (red, green, and blue) inherently determine the pixel's color. It's already being done as accurately as it can be done given the hardware. I'm not sure what you're asking. Jerry Kindall 22:12, 6 June 2006 (UTC)

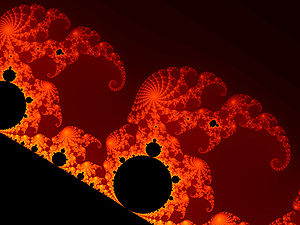

I didn't mean sub-pixels as in red, green, blue; I meant sub-pixels as in greater internal resolution. Antialiasing, which the topic above meandered off to, is specifically about JAGGINESS, e.g. within a diagonal line. But I don't mean compensating for jagginess. I mean, if you render the picture in the thumbnail above entirely confined to a pixel, then if your internal resolution matches the physical resolution, you might well end up simply deciding on a very different color than if you decide to render it internally at a full 1600 x 1200 and then average all those pixels. For example, the thumbnail I inserted above has a DIFFERENT AVERAGE PIXEL VALUE than the full picture, which is at 1024x768. However, because the picture is a mathematical abstraction, we can render it even more deeply (approaching closer and closer to the full fractal). For example, I am certain that the full 1024x768 picture linked above was not merely rendered by solving the equation at each point at a center of a pixel (i.e. the equation was not only solved 786,432 times). Rather, I bet the software that rendered that picture rendered probably a dozen points for each pixel, maybe more, to better approximate the final fractal. However, and here's the rub, some black-and-white fractals end up completely black if you render them to infinity, and others disappear (for example the fractal defined thus: a line such that the middle third is removed and the operation is repeated on the left and the right third, ad infinitum -- if you render this "fully" you get no line to see at all). So I'm most certainly not talking about rendering green, red, blue pixels. I'm talking about rendering pixels that are at a finer granularity than your target, which I think is called oversampling. Of course, this applies especially to cases where a mathematical abstraction lets us get exact sub-values. You can't apply it to a photograph at a given resolution to get more accurate pixels.
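The averaging described above can be sketched as follows. This is a minimal Python illustration, not how any particular fractal renderer works: `coverage`, `distinct_levels` and the test shape `left_quarter` are hypothetical names, and the samples sit on a regular n x n grid inside one pixel.

```python
def coverage(inside, px, py, n):
    """Fraction of an n x n grid of sample points within pixel (px, py)
    for which inside(x, y) is true. The pixel spans [px, px+1) x [py, py+1)."""
    hits = 0
    for j in range(n):
        for i in range(n):
            # Sample at the center of each sub-cell.
            x = px + (i + 0.5) / n
            y = py + (j + 0.5) / n
            if inside(x, y):
                hits += 1
    return hits / (n * n)


def distinct_levels(n):
    """Number of different averages an n x n grid of on/off samples can give."""
    return n * n + 1


def left_quarter(x, y):
    """Test shape: everything left of x = 0.25 is 'ink'."""
    return x < 0.25


if __name__ == "__main__":
    for n in (1, 4, 16):
        print(n, coverage(left_quarter, 0, 0, n))
```

With one sample per pixel the shape is missed entirely, while a 4 x 4 grid already reports a quarter of the pixel covered. For a black-or-white scene, an n x n grid can only produce n*n + 1 distinct averages, so 16 x 16 gives 257, which is about what one 8-bit channel can store.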

A Special Misunderstanding

- and over-sampling any more than 16x16 is pointless with only 256 levels

I don't think this is true for a few different reasons. First of all, when we're talking about full colors, the kind of rendering I'm thinking of is appropriate at 16 or 32 bits of colorspace. And secondly, the reason it still helps to have greater internal resolution is that when your view shifts slightly over time (as with a 3D game) the granularity of your final pixels reveals, over motion, finer detail. It's the same as a fence you can't see through because it leaves only little slivers open -- the slivers are like the final pixels -- but behind that, there's a whole lot more resolution you're just being shown a slice of, and as you move around, you can make out everything behind that fence, just from that one sliver. Or try this: make a pinhole in a piece of paper and look at it from far enough away that you can only make out a tiny bit of detail behind it (so, only a few pixels' worth of resolution). Now jitter it around in time, and you can see that because of how the slice changes you get a lot of the "internal" resolution hidden behind those few pixels. Or notice how a window screen (graininess) disappears if you move your head around quickly and you see the full picture behind it.

Sorry for the rambling. Is "oversampling" the only term for the things I've described? - 11:03, 10 June 2006, 87.97.10.68

- Oversampling is one method of accomplishing anti-aliasing (the most common). Often the chosen locations for oversampling are jittered randomly to avoid secondary aliasing (a sort of aliasing in the aliasing which sometimes occurs from too regularly oversampling), sometimes called Supersampling. An alternative method to oversampling is convolution with, say, a small gaussian blur, but this is usually more computationally expensive and is used in more specialized applications.

- As for your claims about accuracy, yes, technically you should render each pixel in infinite detail, not just screen sized, to precisely determine its colour. In practice this is not possible, and remember that an actual pixel at 24-bit colour only has 256 degrees for any colour component; there is a finite precision to the colour you can choose. Doing a lot of extra calculation for an effect that is generally subtle, and perhaps usually too subtle to represent once calculated, even with an infinitely detailed image such as the Mandelbrot, is generally considered "useless", yes. I would actually doubt that your Mandelbrot image uses any better than 4x oversampling, which would already quadruple the calculation time. (By the way, the image of the Mandelbrot is not really an "equation", it is an iteration of a function given a starting location, and whether that point goes infinite when iterated determines its membership in the Mandelbrot set; this is why it takes more effort to compute than most images, every pixel in that picture I would assume required about 500 iterations of this function (if oversampling, multiply that by the number of oversamples).) It's not worthwhile to take a many thousand times speed hit by doing a full screen oversample of each pixel when the expected error of only taking even just four samples is rather small. The difference between 4x and 9x oversampling is subtle, the difference between 9x and 16x becomes more subtle, eventually it becomes pointless. Yes there is a chance for error given any amount of oversampling (it is easy to come up with pathological examples, though with jittering even "hard" cases tend to work out pretty well), but on average it is very small.

- Sub-pixel rendering is often implemented as an oversampling at the sub-pixel level (3x oversampling). The term is not used to refer to oversampling in general, but rather this particular application that hijacks the RGB sub-pixels to accomplish it. - Rainwarrior 15:56, 10 June 2006 (UTC)
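A rough sketch of "3x oversampling at the sub-pixel level", assuming black ink on a white background and a panel whose subpixels run red, green, blue from left to right. The function names are hypothetical, and the sketch deliberately leaves out the filtering across neighbouring subpixels that real implementations typically add to limit colour fringing.

```python
def pack_subpixels(coverage_3x):
    """Map ink coverage sampled at 3x horizontal resolution onto RGB pixels.

    coverage_3x: values in 0..1, three per output pixel (0 = no ink, 1 = full ink).
    Each sample drives one colour channel directly.
    """
    if len(coverage_3x) % 3 != 0:
        raise ValueError("need three samples per pixel")
    pixels = []
    for i in range(0, len(coverage_3x), 3):
        r, g, b = (round(255 * (1.0 - c)) for c in coverage_3x[i:i + 3])
        pixels.append((r, g, b))
    return pixels


def pack_whole_pixels(coverage_3x):
    """Ordinary grey-scale anti-aliasing of the same samples, for comparison."""
    if len(coverage_3x) % 3 != 0:
        raise ValueError("need three samples per pixel")
    pixels = []
    for i in range(0, len(coverage_3x), 3):
        avg = sum(coverage_3x[i:i + 3]) / 3
        v = round(255 * (1.0 - avg))
        pixels.append((v, v, v))
    return pixels


if __name__ == "__main__":
    # A stroke two subpixels wide that straddles a pixel boundary.
    stroke = [0, 0, 1, 1, 0, 0]
    print(pack_subpixels(stroke))
    print(pack_whole_pixels(stroke))
```

In the example the subpixel version keeps the stroke as two dark subpixels side by side, while the whole-pixel version smears it into two light grey pixels.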

- AWESOME REPLIES! The replies contain a wealth of information, and the second one confirms my initial suspicion way above, that "oversampling" is the general term of art to which I referred. As for the first response (unsigned though, above Rainwarrior's) Wow, very good. Awesome. The only thing I'm left wondering about, is whether it's fair to call, say, "800x600 physical resolution rendered with 9x oversampling" by the term "2400x1800 internal resolution 'scaled' to 800x600 physical representation".

The difference seems to be that "2400x1800 internal resolution" implies that there's no "Often the chosen locations for oversampling are jittered randomly" as you state. Secondly, you don't mention whether I'm right that the internal resolution can be fully "revealed" through motion -- i.e. not just a "prettier picture" or "more accurate picture" but that through subtle motion the full 2400x1800 internal resolution can be revealed. A still picture can't represent 2400x1800 in 800x600 at the same color depth, since an average of nine distinct pictures at the larger resolution would have to map to the same rendering at the lower resolution. However, if you add a component of time/motion the full 2400x1800 internal resolution can be revealed in a distinct way. No two will look the same, since the internal resolution is fully revealed. However, we have no article on internal_resolution and your mentioning "jittered randomly" leaves me with the uneasy thought that this is just a way of getting a nice average. (The random jittering also would lead to a nice dithering effect if I understand you correctly, as long as it really is random enough -- if it's not random enough the jitter would lead to an interference pattern.) I can give you an example if this is wrong, to show my thinking. I think all the articles in this subject can be improved to facilitate understanding, including especially anti-aliasing, interference_pattern, internal_resolution, jittering, oversampling, etc.

Finally, it seems that my original comment "...was not merely rendered by solving the equation at each point at a center of a pixel" implies that I have the correct understanding, which you reiterated with "By the way, the image of the Mandelbrot is not really an 'equation', it is an iteration of a function given a starting location, and whether that point goes infinite when iterated determines its membership in the Mandelbrot set". I think you'll agree I didn't call it an equation, I called it solving an equation for the color-value of each point, and the definition you mention "whether that point goes infinite when iterated determines its membership in the Mandelbrot set" is certainly the result of a function. Also, the coloring is a metric of "how fast" it goes to infinite (black spots don't), but either way each point solved gives an answer to a question, which question can be phrased as an equation. (If you read mandelbrot_set can you agree that your simplification applies to black-white pictures, and color pictures represent how steeply the point goes to infinite? I have no idea what scale they calculate steepness by! But it gives some purdy colors.)

- It was just one reply, I broke it into paragraphs. Anyhow, an "internal resolution" implies things that may not be desired; first, it suggests that more memory might actually be required, but, for instance, in the case of 4x oversampling, the four pixels can often be calculated all at once without requiring the much larger image be stored; second, it disallows jittering (which is generally the cause). As with most things, how to do anti-aliasing is application dependent, e.g. jittering is not used in graphics cards because of the extra calculation it involves (this may have changed recently). Generating a large image and then down-sampling (usually an average) is one method of accomplishing anti-aliasing, but as I said, usually this is avoided so that the memory can be conserved (having more texture memory, for instance, might be important).

- Jittering does produce a kind of dithering. Its purpose is to reduce the effect that regular patterns below the Nyquist frequency can have on aliasing. It doesn't reduce the error, but it distributes that error across the image, making it much more tolerable. Motion can indeed reveal the finer details of an underlying image (which jittering would destroy somewhat), but in most real-time applications where you're going to see motion, it's far more important to do the calculations quickly than completely accurately (want to watch that motion at 1 frame per second, or 30?). Whoever made the earlier comment about 16x16 being the maximum detail for 24-bit colour is in an academic sense wrong about that, not only if there is motion (you could simply multiply his number by the number of frames that particular pixel covers the same area and the idea would be the same), but because resolving finer detail may indeed change the value significantly; the spirit of his point was correct though, the probability that it will change significantly drops off rather quickly (excepting some special cases) as you increase oversampling size.

- As for the Mandelbrot, its original definition was: the set of points that would produce a connected Julia set, as opposed to a disconnected one. (I don't know if you're familiar with Julia sets.) So, technically, the Mandelbrot set is black and white; it would just take an infinite amount of calculation to resolve all of its finer details. The coloured images are usually produced by calculating Mandelbrots at different levels of calculation. To call it "solving an equation" isn't very accurate because the solution is already known; the image of the Mandelbrot is a numerical calculation, i.e. the solution for the Mandelbrot after 4 iterations (taking $z_0 = 0$ and $z_{n+1} = z_n^2 + c$) is: $z_4 = ((c^2 + c)^2 + c)^2 + c$, and I think you could see that there are similar solutions for further iterations, but what determines whether or not a point is in the set is whether or not this is large enough that it cannot possibly converge to zero after further iterations. If a point is determined to still be under a certain magnitude (a sort of "escape" radius) after 4 iterations, it is guaranteed to have been under that escape magnitude after 3, 2, and 1 iterations, thus each level of detail exists inside the set of points calculated by the previous one, and when a smooth gradation of colour is used across these levels of detail, the terraced effect you have seen occurs. - Rainwarrior 17:55, 10 June 2006 (UTC)
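The nested levels can be sketched with the usual escape-time loop. This is a minimal illustration assuming the standard iteration z -> z*z + c starting from z = 0 and an escape radius of 2; the function name and defaults are hypothetical.

```python
def escape_iteration(c, max_iter=100, radius=2.0):
    """Return the first iteration n at which |z_n| exceeds `radius`.

    Returns None if the point has not escaped after `max_iter` iterations,
    in which case it is drawn as part of the set at this level of detail.
    """
    z = 0j
    for n in range(1, max_iter + 1):
        z = z * z + c
        if abs(z) > radius:
            return n
    return None


print(escape_iteration(0j))      # -> None (never escapes)
print(escape_iteration(1 + 0j))  # -> 3 (z goes 1, 2, 5)
print(escape_iteration(3 + 0j))  # -> 1
```

Colouring each pixel by the returned iteration count gives the terraced bands: the points still inside after n iterations are by construction a subset of those still inside after n - 1.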

- thank you, this is more excellent clarification. I'm still reading it, but wonder about the phrase "second, it disallows jittering (which is generally the cause)", as I look at the context above I can't determine what it is the "cause" of, or if you mean it's generally the case, can you clarify the preceding phrase? I'm still reading though. ( also am I correct that 1 fps vs 30 is just rhetorical, right, for more like 15 instead of 30 or 7 instead of 30? Unless the 4-fold (etc) texture size increase exceeds your available memory? computationally it's just linear, right? )

- I'm not sure whether I meant "case" or not, it might have been an unfinished sentence. What I should say is that jittering is used in a lot of applications (e.g. fractals, raytracing), but not often in graphics card anti-aliasing because of its increased computation. The 1 vs 30 wasn't referring to a specific case, but if you were comparing, say, 4x oversampling to 128x oversampling this would be a realistic figure. My point was that greater oversampling incurs (except in special circumstances) a proportional speed hit. - Rainwarrior 22:55, 10 June 2006 (UTC)

Re: the Mandelbrot set pictures (more of which at User:Evercat), I wrote the program and yes, it did indeed use more than 1024x768 points (actually a lot more, though I don't recall the precise number, but it was probably at least 4 and maybe 9 or 16 subsamples per actual pixel), with the final colour for a pixel the average of the subpixels.... Evercat 21:29, 12 July 2006 (UTC)

Image (re-)size query

Just wondering if it wouldn't make more sense to resize the first picture. At full size it shows pixel images blown up by a factor of 6. I think it would make more sense to reduce the thumbnail image also by a factor of 6 to render the blown up pixels as 1:1 and prevent strange artifacts being introduced. —The preceding unsigned comment was added by 82.10.133.130 (talk • contribs) 09:33, 17 August 2006 (UTC)

- I think that particular image needs to be introduced as a thumbnail. It is a much better demonstration at its full size. Perhaps it would be better broken into three small (1:1) images and used later in the document, but what I think we should definitely do is choose a new picture to go at the top. The first image in the document should be a simple picture of subpixel rendering, not an explanation of it. Rainwarrior 18:50, 17 August 2006 (UTC)

- Agreeing with this eight years on. Opening the article with an image where the caption explains that it "does not show the technique" isn't very helpful to the reader. --McGeddon (talk) 17:10, 26 March 2014 (UTC)

Open Source Subpixel Rendering

I have just decided that ClearType is one of my favorite things about Windows, and I am wondering to what extent it is used and available in Linux.

Non rectangular sub-pixels

All the examples assume that LCD sub-pixels are rectangular, making a square pixel. But this isn't always the case: I've just noticed that my two seemingly identical monitors are different. One has the standard pattern, the other has chevron-shaped subpixels (a bit like this: >>>). I don't think there's much effect on sub-pixel rendering as they're both based on a square, but it does explain why one always looks slightly more focused than the other. Elliot100 13:34, 14 September 2006 (UTC)

The chevrons are there to increase the viewing cone of the LCD: each half of the chevron has a slightly different best angle, and the two average together to keep the same average behavior over the wider span of view. The angles can affect the perceived sharpness of the edges of the fonts if the base resolution is low. If the base resolution is high, it wouldn't make much difference.

66.7.227.219 02:42, 29 September 2007 (UTC) Sunbear

Interesting. Can't see any difference in viewing cone but I think the sharpness is perceivable (they are dual so I can drag a test image across the divide). Odd though that they are otherwise identical; same model. Elliot100 13:34, 14 September 2006 (UTC) —Preceding unsigned comment added by 141.228.106.136 (talk)

Graphic formats

There are a few ways in which subpixel rendering can be used on existing graphic formats:

- Vector graphics naturally can be rendered in subpixels

- Bitmap-scaling algorithms can be made to use it

- A bitmap can be created in the first place optimised to a particular subpixel layout

But are there any graphic formats out there that are specially designed to support subpixel rendering? This might improve the rendering quality of certain kinds of images, if the same graphic file can be rendered in terms of the subpixel layout of each device it is rendered on (or in the lack of a discernable subpixel layout, plain pixel rendering). (For that matter, does JPEG already make this possible to any real extent?) -- Smjg 12:40, 10 April 2007 (UTC)

LCD only?

Would this technique not work on at least some CRT displays, for example an aperture grille? OS X's font smoothing seemed to work fine on my old Trinitron. —Michael Z. 2007-06-13 23:14 Z

- Anti-aliasing works fine on all displays. However, ClearType-style subpixel rendering can work better than anti-aliasing on some screens under one specific condition: the computer knows the exact relationship between virtual pixel (as told to the graphics card) and physical pixel (on the screen). In the case of a CRT, knowing this relationship is all but impossible. (Notice that all CRTs have a mechanism in the monitor to shift the image left and right, and to stretch the image vertically and horizontally.) In fact even LCDs using an analogue input don't fully benefit from the technology (although if the virtual pixel to physical pixel correspondence is very close to 1:1, it is conceivably possible that ClearType-style subpixel rendering will work somewhat better than generic anti-aliasing).

IBM invented subpixel rendering?

I don't think so. That doesn't sound quite right.

217.229.210.187 18:52, 4 August 2007 (UTC)

Actually, yes they did. Two engineers applied for the patent in 1988: Benzschawel, T., Howard, W., “Method of and apparatus for displaying a multicolor image” U.S. Patent #5,341,153 (filed June 13, 1988, issued Aug. 23, 1994, expired 3 Nov. 1998)

66.7.227.219 02:30, 29 September 2007 (UTC)Sunbear

Sub-pixel rendering makes it *worse* on some LCDs?

An ideal LCD would have a very small "blurring" optical filter on top of each pixel, such that the pixel looks uniformly white, rather than having 3 vertical coloured stripes. This does actually seem to be the case: I observed the LCD on my laptop (Thinkpad T60p) through a strong zoom lens (300mm macro lens, with x2 converter, OM2 camera, very small depth-of-field), and saw the following:

- Focus on surface of screen: white square pixels, with black borders. (screen door effect)

- Focus slightly (50um perhaps) deeper: pixels comprise 3 vertical bars of colour.

- Still deeper: white squares, but with more pronounced screen-door effect.

So, it seems that this LCD, at least, has a small optical blurring filter applied in front of it, to improve the quality. [That would also be why the human eye can't see the coloured sub-pixels, even though a 100um wide feature should be visible to those with good vision]

In such an LCD, sub-pixel rendering makes the image much worse; the effect is colour-fringing around each letter.

Can anyone confirm or explain this? RichardNeill 02:35, 14 September 2007 (UTC)

Um, you are seeing the effects of being out of focus. If there was a blurring filter on the lcd, then you wouldn't be able to focus through it, just like you can't focus through a frosted glass window. And if there was a blurring filter, sub-pixel rendering still wouldn't have a significant detrimental effect. The colour fringing should be invisible to the eye under most conditions. 122.107.20.56 (talk) 10:51, 1 May 2008 (UTC)

You are looking at the effect of the different layers of the LCD. There is an anti-glare filter on the surface. It does have the beneficial effect of being a reconstruction filter. It is not there to be so though... and it isn't to blur the color subpixels together, your eye does that just fine. This would not affect the subpixel rendering performance unless the filter was extremely blurry. I suspect that you have a non-standard order to the colors and have not used the optimizer to set-up the algorithm accordingly.

66.7.227.219 02:38, 29 September 2007 (UTC) Sunbear

Better smoothing algorithm

I have created and uploaded two images which I originally drew to try to figure out a better way to do subpixel antialiasing on my LCD screen. Do these images seem like something that can be used for this page? The first image is on the right, and was drawn explicitly to demonstrate LCD subpixel positioning, and it compares normal greyscale antialiasing with raw subpixel antialiasing (the ugly kind :-) ) and a sort of "filtered" subpixel antialiasing, along with some color scales to let the user see the way the filter works, how the subpixels are positioned, and examples of Times-roman letter "A" in non-antialiased, greyscale-antialiased, raw-subpixel-antialiased, and filtered-subpixel-antialiased forms.

I kinda screwed up the "filter" as I drew this image, but it is based very loosely on something I read elsewhere on the web. If done right, each subpixel to be drawn gets its brightness cut to 50% of whatever it was originally, while the two neighboring subpixels are then set to 25% of the original value, and added to whatever else needs to be plotted and/or is already on the screen. For example, a single "white" dot 1/3 pixel wide plotted on a black background where a physical red subpixel is would result in a dark blue (0,0,0.25) pixel to the left of a brownish-orange (0.5,0.25,0) pixel. The result is kind of grayish, but you can't exactly draw a 1/3 pixel wide white line. Make the line two or three subpixels wide and it turns mostly white. Either way, it has the effect of antialiasing a drawn image and seems to do well at getting rid of subpixel antialiasing color fringing once and for all.
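The 50%/25%/25% spreading described above can be sketched in a few lines of Python. The function names are hypothetical, and the sketch assumes a single scanline stored as a flat list of subpixel intensities in R, G, B, R, G, B order.

```python
def filter_subpixels(samples):
    """Spread each subpixel sample: 50% stays put, 25% goes to each neighbour.

    Contributions that would fall off either end of the scanline are dropped.
    """
    out = [0.0] * len(samples)
    for i, value in enumerate(samples):
        out[i] += 0.5 * value
        if i > 0:
            out[i - 1] += 0.25 * value
        if i + 1 < len(samples):
            out[i + 1] += 0.25 * value
    return out


def to_pixels(subpixels):
    """Group a flat R,G,B,R,G,B,... list into (r, g, b) tuples."""
    return [tuple(subpixels[i:i + 3]) for i in range(0, len(subpixels), 3)]


# Two black pixels with one "white" dot on the red subpixel of the second.
dot = [0, 0, 0, 1, 0, 0]
print(to_pixels(filter_subpixels(dot)))
# -> [(0.0, 0.0, 0.25), (0.5, 0.25, 0.0)]
```

This reproduces the dark blue pixel next to the brownish-orange one from the example. Because the three weights sum to 1, a line three subpixels wide reaches full intensity at its centre subpixel.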

This second image is a scaled-up version of the first, filtered to create a simulation of what an LCD monitor would do with the original image. Comments are appreciated - I would love to see someone implement this method in Linux/Freetype/Cairo etc.

Vanessaezekowitz (talk) 02:03, 30 June 2008 (UTC)

- Your method would help a bit to reduce color fringing for gray letters on a dark background. However, when done properly and with black letters on a white background, there isn't that much color fringing with subpixel antialiasing to start with. For a convincing smoothing effect, it's not enough to do just rounding to the nearest subpixel because the apparent brightness of a subpixel is different for R, G, and B. The standard brightness weighting values are something like 0.31:0.60:0.09. For example a subpixel (0,1,0) has a brightness of 0.69 at position 0 (center). But a subpixel (0,0,1) has a brightness of 0.09 at +1, and will appear much darker. To produce the same apparent brightness centered at position +1, you need to do something like (0,0.5,1)(1,0,0) - and then apply gamma corrections. The test patterns here use this trick and I'm pretty sure that Freetype et al. use similar approaches. (Disclaimer, I'm affiliated with that website)

- You make a good point. However, (1) isn't the eye's sensitivity to the three color components already accounted for in the design of a typical graphics card and monitor? That is, if I set a whole pixel with values (0.5,0.5,0.5) I get a nice medium gray shade, even though I didn't manually account for the variation in color sensitivity. All of that said, (2) it looks like Freetype doesn't use your method (or mine), but something much closer to the site I drew my idea from (http://www.grc.com/cleartype.htm). Vanessaezekowitz (talk) 06:45, 1 July 2008 (UTC)

- Hmm. Re (1), the answer is no. Try blue letters on a black background versus green on black and you will notice which one appears brighter. The exact luminance weights are a bit different than what I recalled from memory, but it is pretty standard for converting RGB images to monochrome. See e.g. Luma (video).

- Re (2): I dived into the source code of Freetype, and you appear to be right (from what I understand from the source code without extensive study). Freetype uses a simple smoothing filter over the subpixels to reduce color fringing. When I designed the test patterns, I noticed that representing a slanted line as RGB, GBR, BRG, ... gives a rather jagged appearance because a missing blue subpixel is much brighter than a missing green subpixel. And a BRG combination seems to be displaced to the right, which is why I used the weighting algorithm. I assumed that something I could come up with in an hour would probably be incorporated in Freetype, especially because the subpixels in Freetype did seem to be a bit more advanced than simply matching the glyphs to a triple-resolution (subpixel) bitmap. But it used a smoothing algorithm.

- Anyway, this is Wikipedia, which is not a place for original research. The article could give a bit more detail on how subpixel smoothing exactly works in existing implementations, though. Han-Kwang (t) 17:11, 1 July 2008 (UTC)
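For reference, the luminance weighting mentioned in point (1) can be sketched as follows. This assumes the Rec. 601 luma coefficients (0.299, 0.587, 0.114), which are one standard choice for converting RGB to monochrome; the 0.31:0.60:0.09 figures quoted earlier were given from memory. The function name is hypothetical.

```python
# Rec. 601 luma coefficients for R, G, B (assumed here; other standards differ).
REC601 = (0.299, 0.587, 0.114)


def luma(rgb, weights=REC601):
    """Weighted sum of the R, G, B components, each in [0, 1]."""
    return sum(w * c for w, c in zip(weights, rgb))


green = luma((0, 1, 0))
blue = luma((0, 0, 1))
grey = luma((0.5, 0.5, 0.5))
```

With these weights a lone green subpixel carries about five times the apparent brightness of a lone blue one, while a whole pixel at (0.5, 0.5, 0.5) still comes out at half brightness. That is consistent with both observations above: whole-pixel greys look right, but individual subpixels do not contribute equally.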

History and Practice

ClearType was not an implementation of the IBM invention, which nobody knew about until it was dug up after ClearType came out. When did Apple's version come out? After Microsoft? The original invention by a couple of programmers in e-Books was clever but somewhat ad hoc, similar to what Gibson came up with, but with some attempt to filter. Then the problem was analyzed in MS Research, where it was demonstrated that in fact the method could be used on arbitrary image rendering (MS only uses it for fonts). The problem was then turned over to John Platt, an expert on signal processing and filter design, who created a much improved version that uses a specially designed FIR filter. 24.16.92.48 (talk) 14:11, 15 July 2008 (UTC) DonPMitchell (talk) 14:12, 15 July 2008 (UTC)

As an interesting historical note, Microsoft announced ClearType just 12 days after the IBM patent on subpixel rendering was allowed to lapse into the public domain for failure to pay the maintenance fees. DisplayGeek (talk) 19:40, 30 March 2010 (UTC)

Also of historical importance: Candice Brown Elliott, the founder of Clairvoyante and Nouvoyance and the inventor of the PenTile Matrix Family of layouts, first experimented with subpixel rendering on a red/green stripe electroluminescent panel in 1992, when she was an R&D scientist at Planar Systems. She invented the first of the PenTile layouts in 1993 and continued to work on them independently until she founded Clairvoyante in 2000. DisplayGeek (talk) 19:44, 30 March 2010 (UTC)

Patent Issues

Note that FreeType with the subpixel rendering functionality shown in these examples cannot be distributed in the United States due to the patents mentioned above.

It can now. The patents expired in May 2010.

- You could have removed that just as easily as I did. Please remember to sign your posts by typing four tildes. Kendall-K1 (talk) 15:45, 3 March 2012 (UTC)

A bit short on filtering...

The article barely mentions what can be done about "color fringes". With text rendering, this is an absolutely crucial part - the subpixel rendering process before that is downright trivial. In practice, current font rendering systems apply a smoothing filter in subpixel direction to reduce fringes and apply gamma correction to reduce the "smudgy" look. I think this should be discussed either in this article or in Font rasterization.
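As a rough illustration of the gamma-correction step, here is a Python sketch that blends a glyph's per-subpixel coverage in linear light rather than directly on the encoded values. It assumes the standard sRGB transfer function; the function names are hypothetical, and real font renderers may handle gamma differently (for example with a tunable exponent).

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded channel value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(l):
    """Encode a linear-light channel value in [0, 1] to sRGB."""
    if l <= 0.0031308:
        return 12.92 * l
    return 1.055 * l ** (1 / 2.4) - 0.055


def blend(fg, bg, coverage):
    """Blend one channel of text colour `fg` over `bg` in linear light.

    `coverage` is the filtered coverage of that subpixel, in [0, 1].
    """
    lin = coverage * srgb_to_linear(fg) + (1 - coverage) * srgb_to_linear(bg)
    return linear_to_srgb(lin)


# Black text over white at 50% coverage: about 0.735, not 0.5.
half = blend(0.0, 1.0, 0.5)
```

Blending the encoded values directly would give 0.5 here, which displays darker than a true half-covered subpixel; that mismatch is one source of the heavy, smudged look on partially covered edges.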

Non-LCD devices

The article's lede says that subpixel rendering is for LCD and OLED devices. Among other devices with fixed subpixels, plasma displays have them (at least according to the Wikipedia article). Someone posted a question on Talk:ClearType about printers. Anyone know if subpixel rendering has been used on any hard output devices? Bongomatic 05:35, 4 December 2011 (UTC)

Colour

I added colour as a visual aid to better convey the subtle matrix differences (feedback welcome per WP:COLOUR) - note white on black background was too invasive, and yellow and cyan are better as background due to contrast. I let this bold change bake a bit on PenTile matrix family, RGB (rejected), Quattron already. As this is a bold edit, please discuss.

As I was there I fixed unrelated issues (overlinking, links, etc) with this edit. The pair of images in the first section "background" is confusing without further explanation i.e. why an article only about additive colours has a subtractive colour example? why "RGB" is coloured with CMY? why when adding the colours we get black? I'm guessing it is meant to demonstrate an LCD with filters?, hence all the black pixels? That is not stated, is out of context, and in my opinion only confuses at that point in the article. Anyone want to remove or fix? Widefox; talk 12:42, 24 October 2012 (UTC)

Dubious

Nothing of what I know about display technology leads me to agree that an RGBW subpixel structure would have better colour reproduction than anything, let alone CMYK. (This looks more like original research--the false assumption that RGBW is "CMYK in reverse" and somehow has the same colour characteristics.) Rather, RGBW should have the exact same gamut as RGB, only with less saturation in colours with the most brightness. --GregE (talk) 03:50, 14 December 2012 (UTC)

- I agree - and as this has not been challenged or references added to the parenthesis in question, I'll just remove it. Tøpholm (talk) 12:05, 4 August 2016 (UTC)

Subpixel rendering was first brought to public attention by Microsoft in Windows XP as ClearType?

I thought that subpixel rendering was available in Adobe Acrobat / Reader earlier than in Windows XP. In Acrobat I think it was offered by default during initial setup. --pabouk (talk) 09:23, 8 January 2014 (UTC)

Criticism of subpixel rendering

There should be some mention of its disadvantages. Maybe a whole section dedicated to it, even. Though I'm biased against ClearType and its kind. •ː• 3ICE •ː• 08:29, 29 November 2014 (UTC)

If that section comes about, one could mention that subpixel rendering makes it hard to get clean enlarged screenshots of rendered glyphs because of the color fringes. For example, someone taking screenshots of an app's UI to produce documentation enlarges a certain area for detail, and all the text has visible colored edges. It would be handy if, when taking a screenshot, there was an option to exclude the subpixel rendering and switch to normal antialiasing. — Preceding unsigned comment added by 2001:569:7510:A000:1898:E49:4F20:E01D (talk) 01:44, 4 June 2016 (UTC)

Subpixel Rendering Fractal Program

I have designed a fractal program that generates imagery with the option of oversampling with RGB or BGR subpixel rendering. It runs on any modern web browser. You can find this at www.fractalartdesign.com/fractal

The oversampling options are "none" (1x1), "good" (2x2), "detailed" (4x4), and "fine" (8x8) under the "Quality" menu. Exceeding 8x8 oversampling provides no further gain in clarity. When subpixel-rendering is engaged under the "Render" menu, the oversampling adjusts to 6x4 and 9x8 to distribute it over the three primaries, effectively tripling the horizontal resolution of the fractal. It is not engaged under the "none" or the "good" options, as applying subpixels in 3x1 or 3x2 oversampling causes marked color distortion.
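A sketch of how a 6x4 oversampled buffer might be distributed over the three primaries is given below. This is a guess at the general approach, not the program's actual code: the function name, the buffer layout (rows of (r, g, b) tuples), and the plain box average are all assumptions made for illustration.

```python
def downsample_to_subpixels(samples, sx=6, sy=4, order="RGB"):
    """Reduce an oversampled colour buffer to one (r, g, b) tuple per pixel.

    `samples` has height*sy rows and width*sx columns of (r, g, b) tuples.
    Each output pixel is split into three vertical stripes; each stripe
    supplies only the channel of the physical subpixel that sits there.
    """
    if sx % 3 != 0:
        raise ValueError("horizontal oversampling must be a multiple of 3")
    per = sx // 3  # sample columns per subpixel stripe
    height = len(samples) // sy
    width = len(samples[0]) // sx
    image = []
    for py in range(height):
        row = []
        for px in range(width):
            out = [0.0, 0.0, 0.0]
            for k in range(3):
                # Stripe k (left to right) drives R,G,B or B,G,R respectively.
                channel = k if order == "RGB" else 2 - k
                x0 = px * sx + k * per
                total = 0.0
                for y in range(py * sy, (py + 1) * sy):
                    for x in range(x0, x0 + per):
                        total += samples[y][x][channel]
                out[channel] = total / (per * sy)
            row.append(tuple(out))
        image.append(row)
    return image


white, black = (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)
# One pixel whose left third is white and the rest black.
buf = [[white, white, black, black, black, black] for _ in range(4)]
print(downsample_to_subpixels(buf))               # -> [[(1.0, 0.0, 0.0)]]
print(downsample_to_subpixels(buf, order="BGR"))  # -> [[(0.0, 0.0, 1.0)]]
```

The lone red or blue result shows where the fringing artifacts come from: without any smoothing across neighbouring stripes, a white detail narrower than a pixel lights a single primary.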

It does make a difference in the detail of the rendered fractal on an LCD display. Subpixel-rendered images seem sharper. When rendering for print or publication, this option must be turned off to avoid the fringing artifacts.

I attempted to upload samples for consideration in the article, but Wikipedia rejected the uploads as "unknown" in appropriateness.

Gsearle5 (talk) 20:21, 23 September 2016 (UTC)

General Parametrics

I'm not sure if this quite fits in with this topic, but it is very interesting in itself. I found references to an electronic slide viewer called VideoShow and its accompanying software, PictureIt, by a company called General Parametrics. One of the selling points was the control over the horizontal positioning of display elements by apparently addressing the individual phosphors on a CRT display, effectively increasing the horizontal resolution. Here are the news articles and reviews:

- Portable display system produces high-level presentation graphics, Mini-Micro Systems, July 1984

- From Pixels to Microdots, Byte, September 1984

- VideoShow Hones Your Image, Popular Computing, February 1985

--PaulBoddie (talk) 23:06, 15 August 2022 (UTC)

- The Byte article is describing a higher resolution horizontal signal and has nothing to do with subpixel resolution. I think you are confused by terms in that article: at the time the main technological hurdle was compression to use minimal memory, and the analog signal was no problem. Spitzak (talk) 03:06, 28 September 2022 (UTC)

- I acknowledge the technique of minimising memory usage to represent the image, but the rather superficial Byte article talks about pixel triads. Similarly, the Popular Computing article also mentions triads, noting that "MacroVision, however, treats each triad not as a unit but as three separately addressable microdots." So, aside from compression or other techniques concerned with representing an apparently high resolution image in restricted amounts of memory, there is also a technique involving the utilisation of individual phosphors. That latter technique is what I considered pertinent to this article. As for the analogue signal characteristics, I don't know what the signal being "no problem" is meant to say, but you can obviously increase the dot clock frequency. But on a colour display with a dot pitch that doesn't support a higher resolution, what does that do other than address subpixels directly? The fact that the Popular Computing article also talks about colour control indicates that they did more than just increase the frequency: that alone, as my home computer did when used with a television set decades ago, wouldn't have given such a level of control. --PaulBoddie (talk) 11:09, 28 September 2022 (UTC)

Another article:

- MacroVision, Computers & Electronics, December 1984

--PaulBoddie (talk) 21:44, 4 October 2022 (UTC)

Article needs a rewrite to remove incorrect assertions that it's an LCD thing and not as applicable to CRTs

It's simply not true. Videogame artists have been taking advantage of subpixel rendering for decades. This is why SNES and PS1 era art looks so much better at 1:1 native resolution than blown up as raw bitmaps of square pixels. It's why people prefer low resolution displays like CRTs for those games. In fact, for years I was fascinated by Windows XP's "ClearType" on my CRT monitors. This was technology designed for CRT long before LCD monitors became commonplace. The fact that it also works on LCD, and everyone now uses LCD, doesn't imply it's for LCD only. The article would be more accurate if it simply said LCD/CRT without vaguely claiming there's a difference in their subpixel rendering abilities. There isn't. Habanero-tan (talk) 00:59, 26 September 2022 (UTC)

- Agreed. The article describes the topic as "rendering pixels to take into account the screen type's physical properties", and if you look at the General Parametrics devices mentioned above, that is apparently precisely what they do... with a CRT. Moreover, sub-pixel anti-aliasing was featured in the Acorn outline font manager to "increase the positioning resolution" of text in small font sizes, this largely being done independently of the display technology.[1] Arguably, using specific characteristics of the display for positioning is just specialisation of more general subpixel rendering techniques. --PaulBoddie (talk) 23:18, 27 September 2022 (UTC)

- No, you are confusing anti-aliasing with subpixel rendering. Spitzak (talk) 02:58, 28 September 2022 (UTC)

- I am aware of the difference between the two techniques, and I will admit that Acorn's implementation did just anti-alias the text at different subpixel positions but limited itself to controlling the intensity of whole pixels, as opposed to employing a model that considered the display pixel structure. However, I think it is important to reference the antecedents of the described technique, which the first picture on the page tries to illustrate without such additional context. And as noted elsewhere, the microdots concept in General Parametrics' products looks rather more like a direct predecessor of the described technique, if not an actual earlier example. --PaulBoddie (talk) 11:24, 28 September 2022 (UTC)

References

- ^ Raine, Neil; Seal, David; Stoye, William; Wilson, Roger (November 1989). The Acorn Outline Font Manager. Fifth Computer Graphics Workshop. Monterey, California: USENIX Association. pp. 25–36.
